Dynamic assessment of hard-to-measure skills

Can we assess redrafting?

We’ve been running Comparative Judgement assessments of writing for nearly a decade now.

When we first began, back in 2016, we asked schools to submit a portfolio of their students’ writing. We also said that students could rework and redraft the submitted writing.

The problem with this approach was that we would sometimes get schools submitting 60 portfolios from 60 different students that were all incredibly similar. The students had had so much scaffolded feedback on their work and so much heavily structured guidance on how to redraft that they had all ended up creating very similar pieces of writing.

In both educational & assessment terms, this was a problem. We set open-ended and authentic tasks like extended writing because we want students to respond in creative and unexpected ways. If you want to set an assessment where you have a very clear and specific idea of what the correct answer is, you are better off using closed questions than extended writing. You also don’t need Comparative Judgement, which is designed to assess holistic quality, not whether students have ticked off items on a list.

From 2018 onwards, we therefore changed our assessment and asked schools to get students to complete their writing in independent conditions, with no opportunity for feedback and redrafting. We have assessed nearly two million pieces of student writing using this approach, and it’s resulted in some fantastically original and entertaining pieces of writing.

But what about redrafting?

This approach works very well, but we still understandably get questions about redrafting and editing. Teachers will tell us that redrafting, editing and responding to feedback are genuine authentic skills that matter, that should be taught, and that should be assessed too.

Up until now, my response has always been to agree on the value of these skills, but to say that the technical & workload challenges in assessing them are so great that these are perhaps skills we should teach and include in the curriculum & lesson plans, but avoid attempting to assess formally.

However, we are now able to use a mix of new AI technology and established psychometric techniques to develop a new kind of dynamic assessment which can address this issue.

What is Dynamic Testing?

Dynamic Testing allows pupils to receive some form of guidance during an initial testing period, and attempts to measure learning gains that directly result from the guidance.

One form of Dynamic Testing that many people will be familiar with is the nudges and hints provided in online gamified assessments like Duolingo or Khan Academy. This is where you are given a question and then, if you struggle, are given a staged series of hints and suggestions.

For example, suppose you get the question: What is 58 + 76?

A dynamic test would give you the chance to ask for a hint about where to start, and perhaps the hint would be:

“Try adding the ones place first: What is 8 + 6?”

If you're still stuck, it might follow up with:

“8 + 6 = 14. Write down the 4 and carry the 1 to the tens column.”

This form of dynamic testing is known as the “cake” format, as there are different layers of hints in the way that you get different layers of cake.
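To make the layering concrete, here is a minimal Python sketch of a single “cake” item, using the question and hints from the example above. The `CakeItem` class and its field names are hypothetical, invented for illustration; they do not describe how any real platform implements its hints.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CakeItem:
    """One question with an ordered stack of hints (hypothetical structure)."""

    question: str
    answer: int
    hints: List[str]
    hints_used: int = 0  # how many hint layers the student has revealed

    def next_hint(self) -> Optional[str]:
        """Reveal the next hint layer, or return None when none remain."""
        if self.hints_used >= len(self.hints):
            return None
        hint = self.hints[self.hints_used]
        self.hints_used += 1
        return hint

    def check(self, response: int) -> bool:
        """Return True if the student's response matches the answer."""
        return response == self.answer


# The worked example from the text: 58 + 76 with two layers of hints.
item = CakeItem(
    question="What is 58 + 76?",
    answer=58 + 76,
    hints=[
        "Try adding the ones place first: What is 8 + 6?",
        "8 + 6 = 14. Write down the 4 and carry the 1 to the tens column.",
    ],
)
```

Recording `hints_used` alongside the answer is the key design choice: a correct answer reached with no hints and one reached after two hints can then be told apart.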

There is an alternative form of dynamic testing known as the “sandwich” format. This involves a pre-test, followed by tailored instruction, and then a post-test.

We are planning to run an assessment of Y6 redrafting skills using this sandwich format. Here is how it will work.

Pre-test: Students complete their writing in standardised conditions without assistance as part of our national projects. It is assessed using human teachers making Comparative Judgements. This assessment has already taken place - it was our annual Comparative Judgement Year 6 assessment which takes place in February / March.

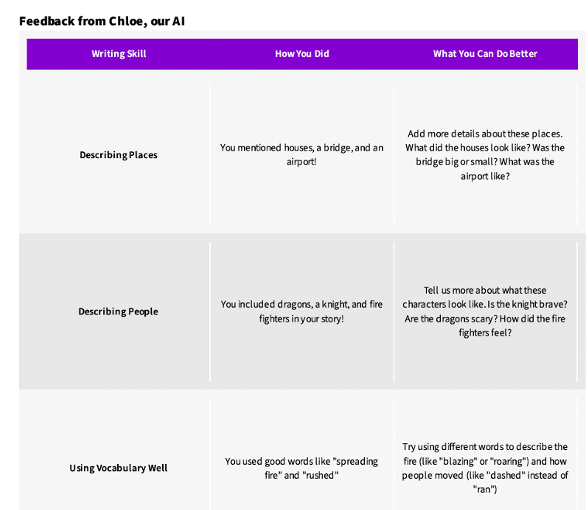

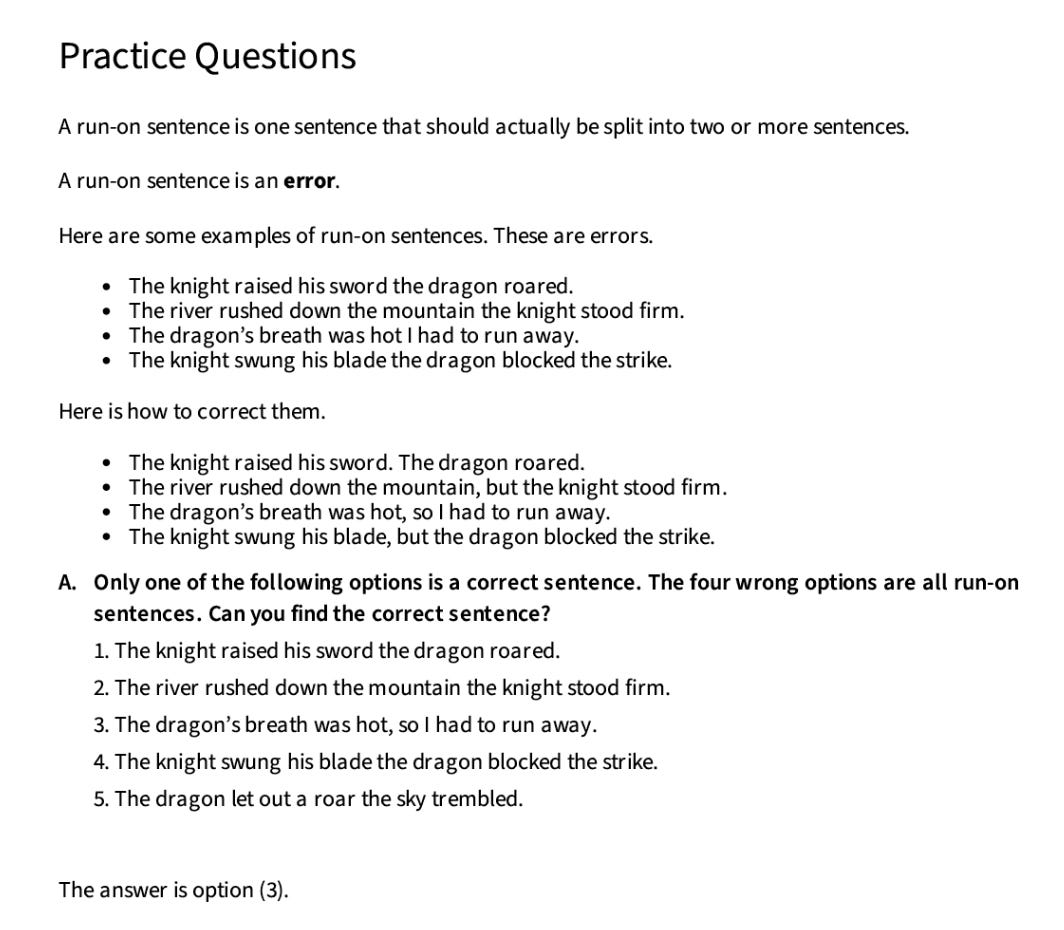

Tailored instruction: Students receive an automated and personalised workbook with four kinds of feedback: a standardised score produced by human teachers using the Comparative Judgement process; a paragraph of written feedback that is spoken by human teachers and processed by AI; a checklist of writing features directly generated by AI; and a series of multiple choice questions on an aspect of writing, introduced by a short instructional resource. The exact set of multiple-choice questions they receive will depend on their standardised score. You can read more about the feedback available here.

Post-test redraft: The students rewrite their narrative and it is assessed again using Comparative Judgement. For this assessment, we will allow schools to choose to use AI judgements to supplement or replace human teacher judgement, as this will reduce the workload for teachers.

Here are some of the resources from the tailored instruction booklet.

Our hope is that the combination of feedback and targeted instruction will increase students’ motivation to re-draft and provide them with useful guidance that will help them improve their work. We’ll also be able to see if the improvement in student scores gives us a reliable measure of their redrafting skill and the effectiveness of their response to feedback.

Important research considerations

How comparable are the pre-test and post-test scores? In one sense, the scores on the pre-test and post-test will be comparable. We will place them on the same anchored scale, and we want to see how much improvement each individual student makes from the pre-test to the post-test. However, there is another sense in which the scores will not be comparable. We expect that most students will get a better score on the redraft than on the pre-test. It would not be fair to compare one student’s higher score on the redraft with another’s lower on the pre-test, and conclude that the first student is doing better. In the same way, if we were using the cake format of dynamic testing, it would not be fair to compare the score of a student who didn’t ask for any hints with one who asked for lots.
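The distinction can be sketched in a few lines of Python. The scores below are made up purely for illustration and are not real results, and the `StudentScores` class and `rank_by_gain` function are hypothetical names; the only assumption taken from the text is that both scores sit on the same anchored scale.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class StudentScores:
    """A student's two scores on the same anchored scale (illustrative)."""

    name: str
    pre_test: float  # independent first draft
    redraft: float   # post-test redraft after tailored instruction

    @property
    def gain(self) -> float:
        """Improvement from pre-test to redraft for this one student."""
        return self.redraft - self.pre_test


def rank_by_gain(students: List[StudentScores]) -> List[StudentScores]:
    """Order students by how much they improved, largest gain first."""
    return sorted(students, key=lambda s: s.gain, reverse=True)


# Invented scores, for illustration only.
students = [
    StudentScores("Student A", pre_test=480.0, redraft=520.0),
    StudentScores("Student B", pre_test=510.0, redraft=525.0),
]

# Fair: compare like with like (pre-test with pre-test), or compare gains.
# Unfair: compare Student A's redraft (520) with Student B's pre-test (510).
```

In this invented example Student A's redraft score is higher than Student B's pre-test score, yet Student B scored higher on both like-for-like comparisons. Student A simply made the larger gain.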

What conditions will the redraft be completed in? We want to guard against the problem we saw a few years back, where students ended up submitting very similar pieces of work. We are not conceptualising the skill of redrafting as something that has just one correct end goal. We are conceptualising it as something more like the original process of writing: more authentic and creative, and therefore open to different types of response.

What if students improve their work, but not their thinking? We have written extensively about one major problem with feedback: it can lead students to focus solely on improvements to the piece of work in question, improvements that do not update their mental models and therefore do not transfer to future similar pieces of writing. We have aimed to guard against this with the inclusion of multiple choice questions that attempt to provoke a change in students’ thinking – but will this be enough? We’d have to track the students’ writing again, on the pre-test of the following year’s assessment, to see if their improvements on the redraft have been consolidated and can be reproduced on a new piece of work.

How to take part

We are making the post-test assessment available for free to any school in England & Wales, even if they did not take part in our pre-test. You can register for it now here and read more details here.

Schools who took part in our original Year 6 assessment and therefore received our feedback reports can use those resources with students to help them redraft.

Schools who did not take part in the original Year 6 assessment can get students to write their response and redraft it using their own resources.

Further Reading on Dynamic Testing

van Graafeiland, N., Veerbeek, J., Janssen, B., & Vogelaar, B. (2023). Discovering Learning Potential in Secondary Education Using a Dynamic Screening Instrument. Education Sciences, 13(4), 365. https://doi.org/10.3390/educsci13040365

Vreeze, M.G.J. de (2024). Cognitive training and dynamic testing in the school domains: a glimpse on primary school children's potential for learning. https://hdl.handle.net/1887/4098055

Yes, absolutely, you can assess redrafting, revising, and editing. Doing it well hinges on time and class size. AI, peer-to-peer assessment, and data can help with the process. I'll try to circle back with a more detailed response. Thanks for keeping this important conversation going.