What kind of student feedback do teachers think is best?

The tension between personalised and in-person education

Over the last year, we have added many different types of AI-enhanced student feedback into our Comparative Judgement writing assessments. AI has made it incredibly quick and easy to create feedback that would typically have taken hours of teacher time.

However, whether the feedback is generated by AI or by humans, we need to be sure it is useful, and there are long-standing concerns about whether written feedback of any kind actually helps students improve.

(This is a problem with the evaluation of AI more generally. We evaluate AI by seeing if it can reproduce something that professionals currently spend a lot of time on. We don’t ask whether the thing the professionals are spending time on is actually valuable.)

Here are the questions we need to ask to decide whether any form of writing feedback is useful.

Do students understand what this feedback means and what action they need to take to improve?

If students follow the advice given in this feedback, will it make them better writers?

Is the feedback helping to improve their overall writing skills, or just improving the specific piece of work?

Fortunately, AI can help us answer these questions more quickly too. We have now integrated AI judges into our Comparative Judgement assessment process, which makes it quicker and less burdensome to run follow-up assessments that measure student progress. Our first attempt at this, CJ Dynamo, showed that on average students made good progress in response to feedback, but a significant minority went backwards.

What do teachers think of it all?

As well as hard assessment data on student improvement, we also want to know what teachers and students think about our new reports.

So far, our teachers have told us that the report they find most useful is the teacher report, which gives teachers personalised information on every student. The report has three elements: data, AI feedback and the student's writing. Teachers prefer it to the student report, which is similar but has no data and only simplified AI feedback.

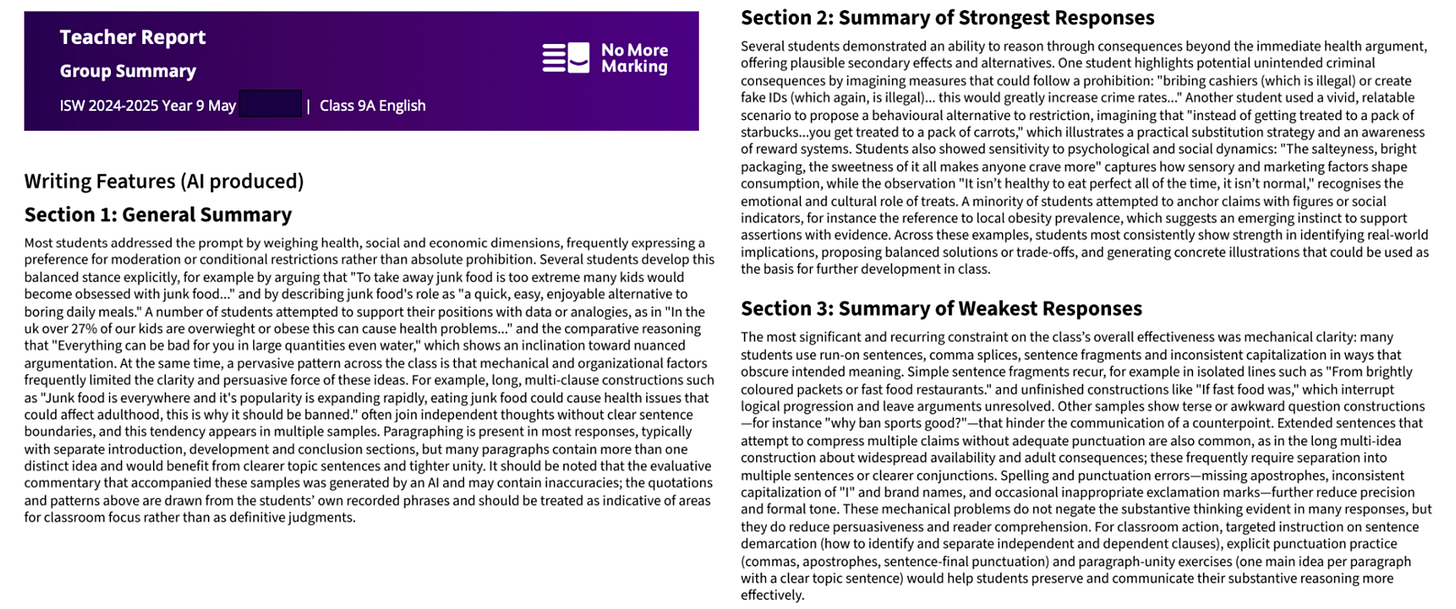

The most-requested feature from teachers has been an AI-generated year group summary of all this personalised feedback. We’ve developed that, and you can see an example of what it looks like here. It’s kind of like an examiner’s report, but just for your students.

Why do teachers want this feedback?

So, teachers seem to prefer our teacher report to our student report, and their most-requested feature is a class or year group summary. That suggests to us that they don't want to give feedback on writing directly to students. Instead, they want to mediate the feedback through whole-class instruction.

This has been a trend in English schools since before the development of Large Language Models. We have also written extensively about the value of whole-class feedback compared to traditional written comments, so to that extent we are pleased to see teachers recognising this.

Would students be better off with personalised feedback?

One of the long-standing criticisms of whole-class feedback is that it isn't personalised. Generally speaking, a teacher will design whole-class feedback to focus on the most common errors they see in the class, and AI-generated whole-class feedback does something similar. Students who make rare errors, no errors, or more errors than average will not be getting ideal feedback. There are some ways to mitigate this problem, but it would be unrealistic to pretend it can ever be completely solved.

However, it’s also fair to accept that this is not just a limitation of whole-class feedback. It is a fundamental limitation of the traditional human classroom itself. One teacher cannot realistically personalise instruction for 20 or 30 students.

One of the major arguments in favour of education technology has been that it can solve this problem and provide tailored instruction for every student. The development of Large Language Models has only increased the momentum for personalised learning.

So you might be thinking that it is a bit backward of us, and our teachers, to be using LLMs to provide something that isn't personalised: just a summary of where a class is. Surely students would be better off with the personalised LLM feedback?

Not necessarily. The reality is that our current education system is based around in-person physical schools and classrooms, led by human teachers. There is a good reason for that: we saw in the pandemic that however amazing your online learning platform, it cannot provide the structure, routine, discipline and community of an in-person classroom.

And those in-person virtues create constraints. A teacher generally does want their students to be working on the same concepts and moving at roughly the same pace. If they are all doing their own thing at their own pace, it is hard to keep those valuable structures and routines in place.

The great challenge for modern education technology is to find ways of integrating technology and the classroom: to resolve the tensions between the personalised and the in-person. Technology’s ability to personalise instruction is genuinely amazing, and we need it. But the human-scale screen-free community of the traditional classroom is also amazing, and we need that too. There are limits to how much students will learn on a screen and on their own.

It’s counter-intuitive, but using LLMs to generate non-personalised feedback might be more effective than you first think.

Thanks for the article. When I read this section,

"Here are the questions we need to ask to decide whether any form of writing feedback is useful.

Do students understand what this feedback means and what action they need to take to improve?

If students follow the advice given in this feedback, will it make them better writers?

Is the feedback helping to improve their overall writing skills, or just improving the specific piece of work?"

I wondered how teachers would react if the options applied to them. As a head, and when working for local authorities, I frequently observed other teachers, and this usually involved giving feedback. I don't believe the observed teachers were ever asked how they'd prefer the feedback, i.e. face to face or in writing. Usually it was face to face first, with a write-up later. I appreciate that observing one teacher is different from teaching 30 students. In my experience, if you had to give a tricky message because the lesson didn't go well, most teachers were not receptive to any type of feedback. Do students feel the same, I wonder? My class teacher experience was mainly at primary age, and written comments were mostly ignored in favour of a mark or whatever icon the teacher used to express the equivalent of 'well done' or 'this is a bit disappointing'.

A long-time habit of mine, when I worked too hard as a senior English teacher, was to write vast quantities of individual feedback, then anonymise and collate it and post it to the whole class (i.e. add it to all my other all-class advice posts). Admittedly, that's different to what you explore above, because it means my students sometimes got to read lots of personalised advice that was NOT addressed to them. I did this because (a) when applied to, say, writing skills, it both anticipates feedback that students will need in future and reinforces feedback they have already had; and (b) substantive content advice in English Literature teaching is 3/4 of the work if you want your students to really know a text. For example, if a student essay on Henry V raises an interpretive point that prompts a useful reply from me, then that reply can benefit many students, not just the student who inspired it.