Can ChatGPT provide feedback? Our latest research

And how to use our new automated feedback system

Back in January we wrote two posts asking if ChatGPT could provide useful feedback to students. We could see that ChatGPT was capable of providing fluent prose paragraphs about the strengths and weaknesses of an essay. In the first post, we outlined our concern that this model of feedback itself is flawed. In the second, we concluded that if you could get the AI to be as specific as possible about how the student could improve, it might be helpful.

Since then we’ve integrated GPT-3 into our judging website so that it can provide marks for essays. Unfortunately, we’ve found it is not great at providing marks for writing, as we’ve written about here and here.

In this post, we’ll review how good it is at providing feedback. We have a separate part of our website that automates this process too, and we feel that it is better at providing feedback than it is at providing marks.

We experimented with some different prompts, and the one we have settled on is this:

What would you grade this essay and why? Please also give one specific example of how to improve this essay and one specific example of a spelling or grammatical error that should be corrected.

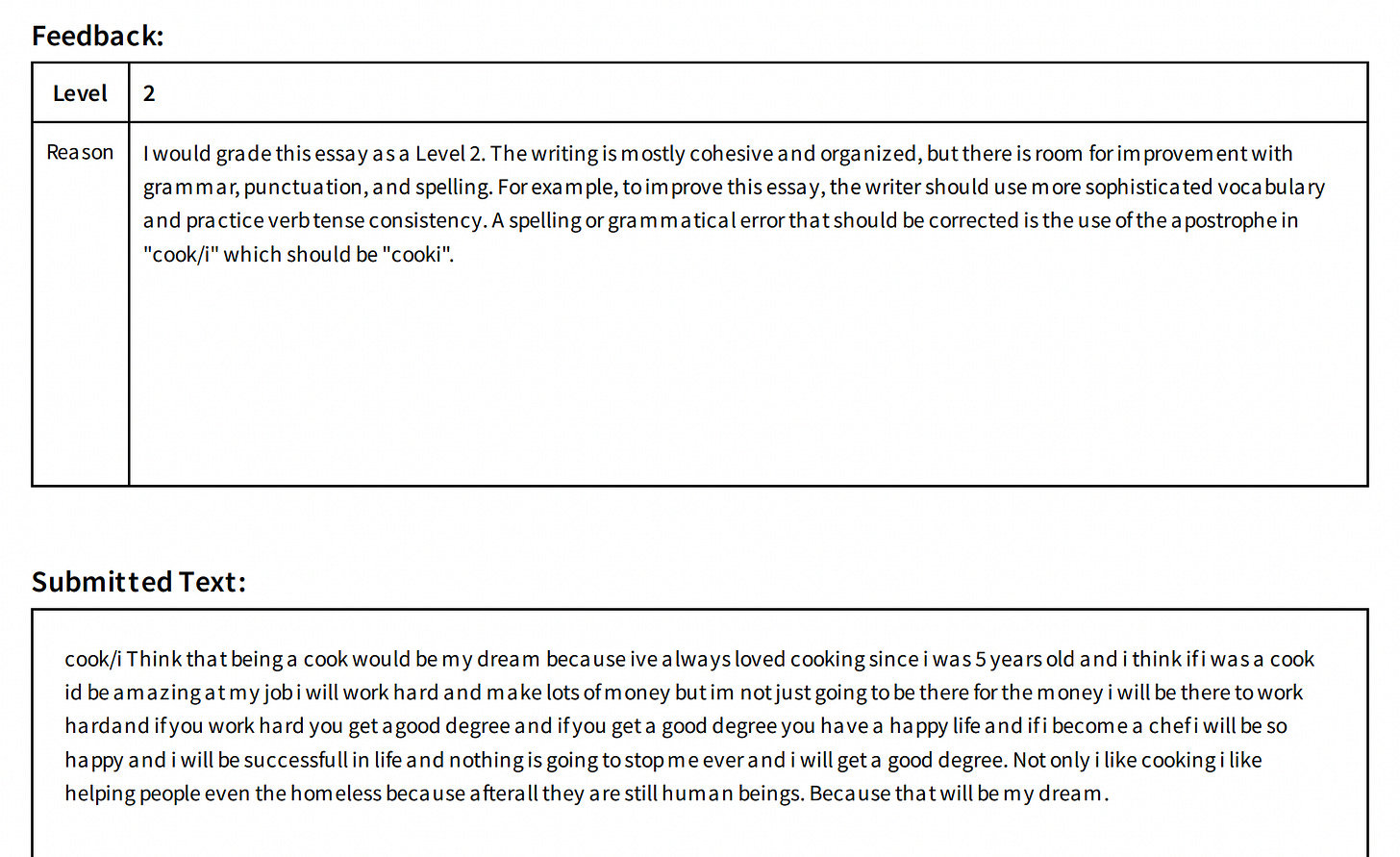
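For anyone who wants to experiment with the same wording outside our website, here is a minimal sketch of how the prompt above could be combined with a student's essay before being sent to a language model. Only the prompt text comes from this post. The names `FEEDBACK_PROMPT` and `build_feedback_request`, and the "Essay:" layout, are assumptions made for illustration and do not describe how our website works.

```python
# The prompt wording is quoted from this post. Everything else here
# (names, layout of the combined text) is a hypothetical illustration.
FEEDBACK_PROMPT = (
    "What would you grade this essay and why? "
    "Please also give one specific example of how to improve this essay "
    "and one specific example of a spelling or grammatical error "
    "that should be corrected."
)


def build_feedback_request(essay_text: str, prompt: str = FEEDBACK_PROMPT) -> str:
    """Return the prompt followed by the essay, as one block of text."""
    essay = essay_text.strip()
    if not essay:
        # An empty essay would only produce generic feedback, so stop early.
        raise ValueError("essay_text is empty")
    return f"{prompt}\n\nEssay:\n{essay}"


if __name__ == "__main__":
    # Swap in your own prompt by passing prompt="..." as a second argument.
    print(build_feedback_request("My ideal job is a police officer."))
```

The resulting text can be pasted into ChatGPT or passed to whichever model you are testing. Keeping the prompt as a separate value makes it easy to try a different wording on the same essays.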

Sometimes this works relatively well, and the AI structures its response into three clear parts that respond to the three clear things we’ve asked it to do. Here’s one of the better examples.

If the aim of feedback is to improve the students’ thinking, then this feedback definitely has flaws. But none of those flaws has anything to do with AI: they are the result of the very model of written feedback, which is limited in what it can achieve.

I’ve written about this at length here, but I will reiterate briefly: written feedback (whoever writes it!) has two problems. The first is that it is too vague - it is true but useless. In the above, the advice to ‘provide more detail’ is a great example of this. The second is that when you do make the feedback more specific, the risk is that it focusses on improving the work, not the students’ thinking. The specific advice here to ‘include more reasons why the job of a police officer is ideal’ is a good example of this. It would definitely improve this essay, but it would not address why the student didn’t include reasons in the first place, or help them to include better reasons in the next essay they write on a different topic.

However, I want to stress here that this is not a criticism of GPT. As written feedback goes, this is probably about as good as it gets. I’m not sure a teacher would be able to write something much better, even if they were given unlimited time - and of course teachers don’t have unlimited time, and writing something like the paragraphs above is incredibly time-consuming to do for an entire class.

I think my preferred option would be simply to get rid of written feedback completely, and for teachers to focus on providing more useful feedback in the form of a follow-up lesson, a tweaked curriculum sequence, or a series of questions designed to work out why the student is making certain errors.

However, that’s a fairly idealistic position to take, and there are plenty of systems where written feedback is necessary, and where the time it takes to provide it probably takes teachers away from the more useful work.

So perhaps if ChatGPT can do the time-consuming job of providing written feedback like this - accurate, personalised, not completely unhelpful - it would free teachers up to spend time thinking about the classroom activities that would really help students improve.

So our conclusion is that if ChatGPT could produce feedback like this at scale, it would be pretty useful - not transformative, but useful.

However, not all the feedback it provides is like this. On other occasions it doesn’t provide concrete suggestions and stays very generic and brief.

Sometimes it doesn’t identify a specific grammatical error, even though we asked it to.

Sometimes it just makes mistakes!

So it is not reliable enough yet to be used without some form of teacher oversight. We have made the feedback editable, so you can easily edit what GPT has provided, save your changes, and print off a PDF to return to each student, with your edited feedback on the same page as their response.

If teachers have to make these changes and additions, will it still be useful and time-saving? Is there a risk we are persisting with a model that is intrinsically flawed rather than moving to a completely different one like whole-class feedback? Maybe. Ultimately we are not the best judges of that - our teachers are.

So we are opening up this facility for our subscriber schools to trial in June and July, so we can get some feedback about whether it is worth persisting with. You can choose to use our prompt or to enter your own. (For those people who have been asking us about our prompts and who are convinced that if only you tweak the prompt, you will get great results at scale - well, here is your chance!)

We will be running a webinar on Tuesday 23rd May at 4pm where we will explain how the new website works. Anyone can attend but only subscribers will be able to use the website. You can register for it here.