How does comparative judgement compare with multiple choice in assessing maths?

This post is part of a series outlining No More Marking’s initial findings from a mathematics research project carried out jointly with Axiom Maths in 2023–2024. Earlier posts in the series cover the initial findings from the multiple-choice assessment and from the comparative judgement assessment.

As part of the project, Year 7 students sat both a comparative judgement assessment of mathematical reasoning skills and a more traditional multiple-choice assessment of maths knowledge. We matched each student’s comparative judgement score with their multiple-choice score; matched scores were available for 1,667 students. Figure 1 shows a scatter plot of these matched scores.

The correlation between the two sets of scores was 0.46. This shows a clear positive relationship between the two assessments, but one weak enough that neither assessment was simply duplicating the other.
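The post does not say which correlation coefficient was used; assuming it is Pearson’s r, the sketch below shows how such a figure is computed from matched score pairs. Squaring r gives the proportion of variance the two scores share: 0.46² ≈ 0.21, so only about a fifth of the variation in one score is accounted for by the other. The function names `pearson_r` and `shared_variance` are hypothetical, and the five pairs of scores are made up for illustration, not taken from the project data.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists of scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length score lists with at least two entries")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-products of deviations from each mean
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Sums of squared deviations for each list of scores
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(ss_x * ss_y)


def shared_variance(r):
    """Proportion of variance two measures have in common (r squared)."""
    return r ** 2


# Hypothetical matched scores for five students, for illustration only
cj_scores = [52, 61, 47, 70, 58]
mc_scores = [14, 18, 15, 16, 20]
print(round(pearson_r(cj_scores, mc_scores), 2))

# The reported correlation of 0.46 corresponds to roughly 21% shared variance
print(round(shared_variance(0.46), 2))  # 0.21
```

A correlation of 1 would mean the two assessments ranked and spaced students identically; the reported 0.46 leaves most of the variation in each score unexplained by the other.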

We were curious about four outliers highlighted in the scatter plot: the two in the top-left, who did well in the comparative judgement but relatively less well in the multiple choice, and the two in the bottom-right, who did well in the multiple choice but less well in the comparative judgement.

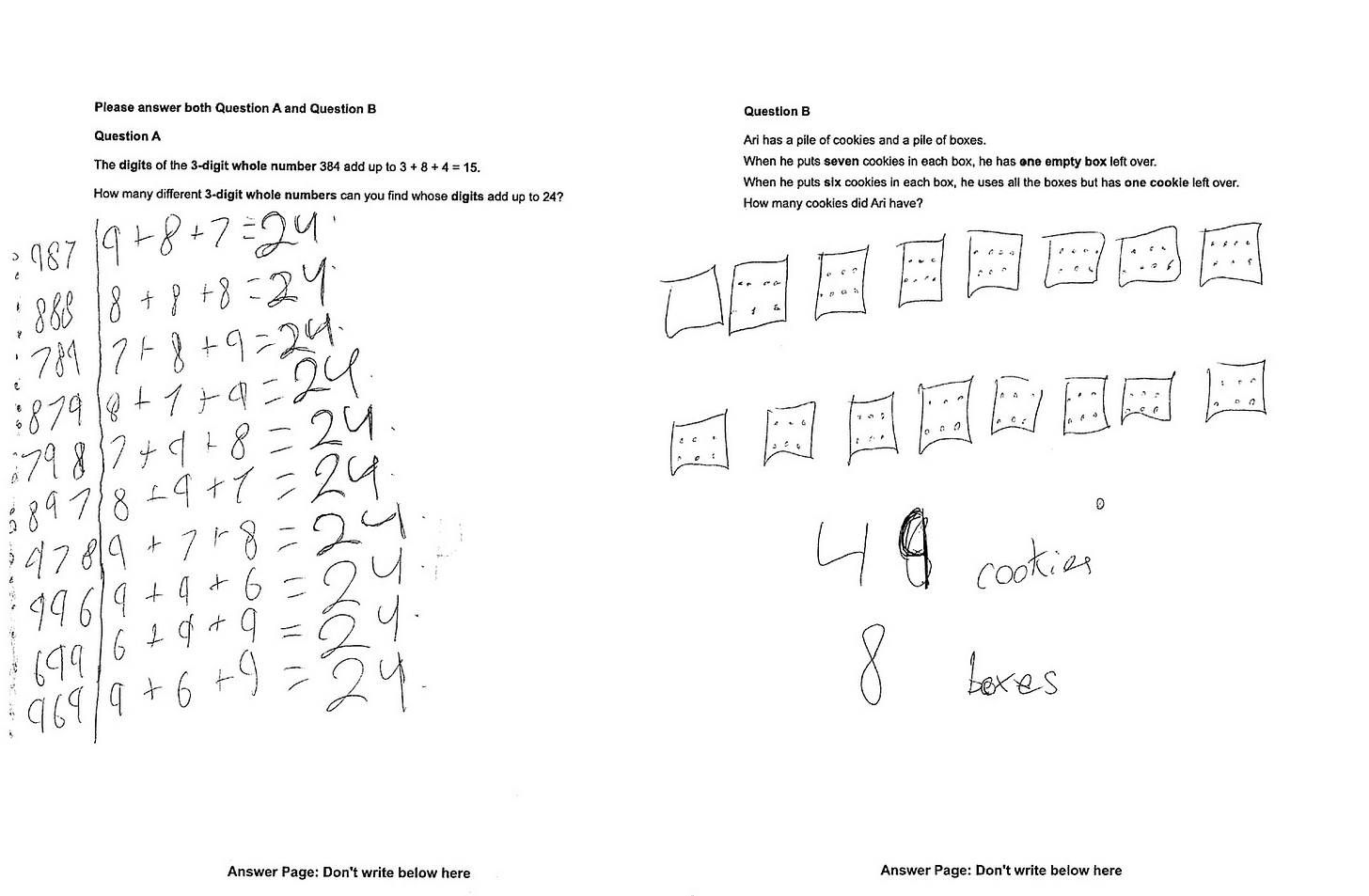
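The post does not say how the four outliers were chosen; they appear to have been picked out by eye from the scatter plot. One hypothetical rule that would pick out students in those corners is to standardise both scores and flag anyone whose two z-scores differ by more than a chosen threshold. The function names and the threshold of 2.0 below are illustrative assumptions, not the project’s method, and the four pairs of scores are made up.

```python
import statistics


def z_scores(values):
    """Standardise scores to mean 0 and (sample) standard deviation 1."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    return [(v - mean) / sd for v in values]


def flag_discrepant(cj_scores, mc_scores, threshold=2.0):
    """Return (top_left, bottom_right) lists of student indices whose standardised
    comparative judgement and multiple-choice scores differ by more than threshold.

    top_left: much stronger on comparative judgement than on multiple choice.
    bottom_right: much stronger on multiple choice than on comparative judgement.
    """
    if len(cj_scores) != len(mc_scores):
        raise ValueError("score lists must be matched student by student")
    z_cj = z_scores(cj_scores)
    z_mc = z_scores(mc_scores)
    top_left, bottom_right = [], []
    for i, (a, b) in enumerate(zip(z_cj, z_mc)):
        gap = a - b  # positive when the comparative judgement score is relatively higher
        if gap > threshold:
            top_left.append(i)
        elif gap < -threshold:
            bottom_right.append(i)
    return top_left, bottom_right


# Hypothetical scores for four students, for illustration only
print(flag_discrepant([10, 0, 5, 5], [0, 10, 5, 5]))  # ([0], [1])
```

Standardising first puts the two assessments on a common scale, so a gap measures relative rather than raw performance; the threshold controls how extreme a mismatch must be before a student is flagged.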

Figures 2 and 3 show the top-left students’ comparative judgement responses, and Figure 4 shows their multiple-choice answer sheets.

The students identified the possible combinations for Question A, were able to try different combinations, and used pictorial representations for Question B. However, their multiple-choice answer sheets suggested that both had been overwhelmed by that assessment. The first student had put crosses everywhere and answered only the first five questions. The second student answered all the questions but got very few correct. When we asked the school’s assessment coordinator for a possible explanation of the first student’s performance, they replied:

> He did not listen to the instructions and completed the form incorrectly. His teacher said "He filled out the multiple choice incorrectly. He just filled in nearly every box in the first few lines."

The bottom-right students’ comparative judgement responses suggest that they, in turn, were overwhelmed by the open-ended nature of the problem-solving tasks. Again, when we asked the school’s assessment coordinator for a possible explanation for one of these students, they replied:

> I have spoken to his teacher and apparently he is pretty good with simple calculations but then asks a lot of questions when the context changes. He may just struggle with the problem solving side of things and therefore couldn't interpret what to do for the judgement problems.

These contrasting pairs of students suggest that the format of an assessment can affect a student’s score. Relying on a single type of maths assessment may therefore not give the full picture of a student’s mathematical ability.

Thanks for sharing your work :-)

Did the participants complete equivalent or identical questions in both formats (MCQ & open answer)?

If so, could you share the MCQ distractors for these questions?