Using AI to automate written comments

Verbal feedback given!

Over the last couple of years, we've used this Substack to document our experiments with using artificial intelligence to mark student work and provide feedback on it. (See, for example, Can Chat GPT mark writing?, Can ChatGPT provide useful feedback? and More GPT marking data.)

As you will see from these posts, we're relatively sceptical about the idea that AI can replace human assessors. Our major issue is that new artificially intelligent Large Language Models make a lot of mistakes - so-called "hallucinations" - which means that it is hard to trust their outputs without significant human oversight.

However, after quite a bit of experimenting, we have developed a tool which we think plays to the strengths of humans and AI and provides both students and teachers with useful and reliable information.

Here's how it works.

Teachers take part in a Comparative Judgement assessment as normal.

When they judge each student response, they can record a verbal comment with feedback for the student.

In total, each student response will receive several verbal comments from several different teachers.

We then use AI in two ways: first to transcribe all the verbal comments, and then to combine them into a paragraph of written feedback for each student, and a booklet full of class and year group feedback for the teacher.

The major difference between this approach and approaches that we and others have tried before is that the AI is no longer responsible for creating the feedback. The teachers create the feedback. The AI does the admin of transcribing it, combining it, and polishing it.
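For readers who think in code, that division of labour can be sketched roughly as below. This is an illustrative Python sketch, not the actual system: the names `VerbalComment`, `build_feedback`, `transcribe` and `combine` are hypothetical, and the two AI steps are passed in as plain functions so that nothing here assumes a particular speech-to-text or language model service. The example comments are made up.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class VerbalComment:
    student_id: str   # whose response was being judged
    teacher_id: str   # who recorded the comment
    audio_ref: str    # hypothetical handle to the recording


def build_feedback(
    comments: Iterable[VerbalComment],
    transcribe: Callable[[str], str],
    combine: Callable[[List[str]], str],
) -> Tuple[Dict[str, str], str]:
    """Return (per-student feedback, whole-class feedback).

    The teachers' comments are the only source of content; the two
    callables only transcribe and merge what the teachers said.
    """
    by_student: Dict[str, List[str]] = defaultdict(list)
    for comment in comments:
        text = transcribe(comment.audio_ref).strip()
        if text:  # skip empty recordings
            by_student[comment.student_id].append(text)

    per_student = {sid: combine(texts) for sid, texts in by_student.items()}
    all_texts = [t for texts in by_student.values() for t in texts]
    whole_class = combine(all_texts)
    return per_student, whole_class


# Stand-ins so the sketch runs without any AI service (assumed data).
fake_audio = {
    "a1": "Great opening sentence.",
    "a2": "Needs more description between the dialogue.",
    "a3": "Accurate spelling throughout.",
}
comments = [
    VerbalComment("student-1", "teacher-A", "a1"),
    VerbalComment("student-1", "teacher-B", "a2"),
    VerbalComment("student-2", "teacher-A", "a3"),
]
per_student, whole_class = build_feedback(
    comments,
    transcribe=lambda ref: fake_audio[ref],
    combine=lambda texts: " ".join(texts),
)
print(per_student["student-1"])
```

In a real setting, `transcribe` and `combine` would be where the AI sits. The point of the shape is that every sentence of feedback can be traced back to something a teacher actually said.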

What we've tried to do is to find the most efficient means possible of gathering the type of human insight that AI finds difficult, and then to use the AI to do the more mundane administrative tasks that it is better at.

It does take teachers a bit more time to provide the verbal comments - but nothing like as much time as it would take to write them all out. The difference between the average time taken to judge without comments and with them is just 5 minutes. For that extra 5 minutes, students get written comments and the teachers also get a detailed whole class feedback report based on insights from all the teachers in their school.

In our most recent Year 5 assessment, 31 schools trialled the approach. In total 204 teachers left 5,321 comments. In the green box below is an example of the feedback for students.

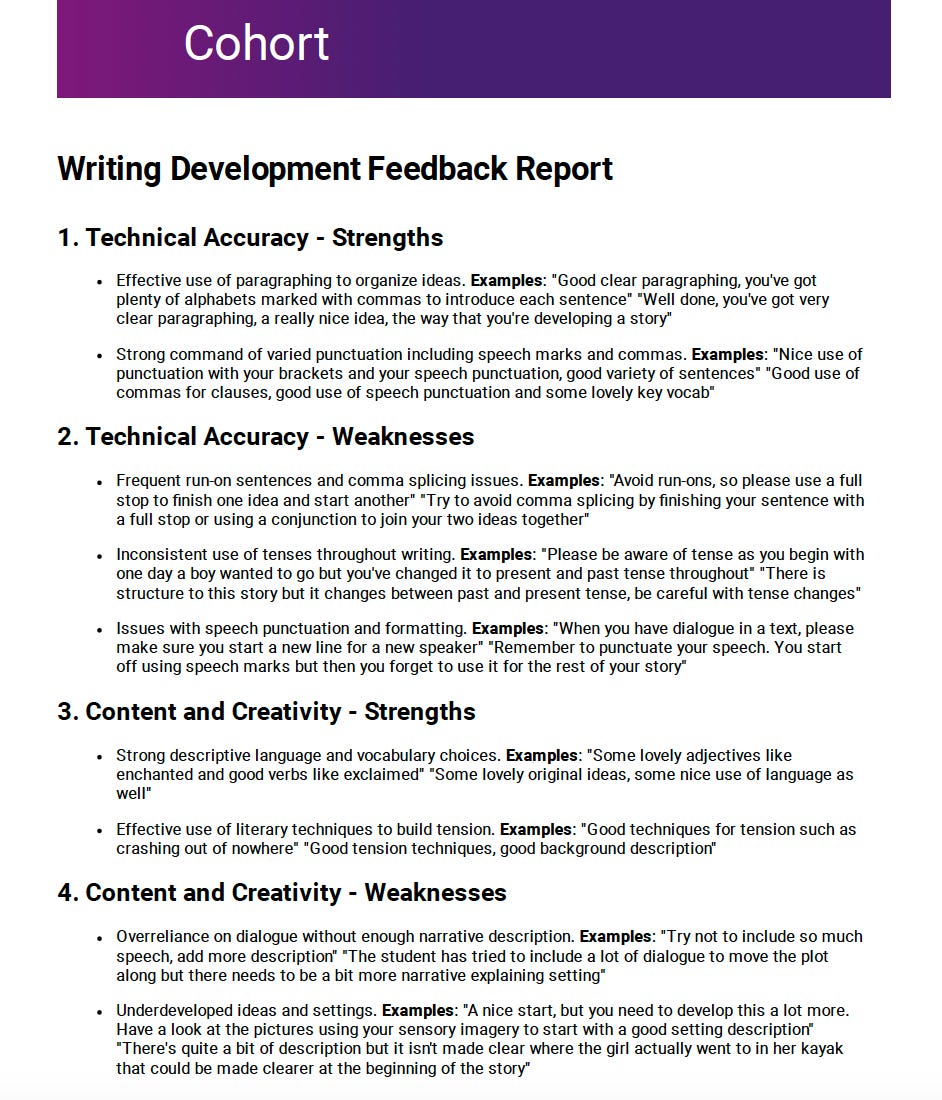

And here's an example of the whole-class feedback.

The feedback on the feedback has been very positive.

We are now making the system available for larger trials. In January and February, subscribers to our UK primary project can use it for Year 1 and Year 4 writing, and subscribers to our US project can use it for their mid-year assessments.

After those trials, we'll consider opening it up more widely.

We have a help page and videos showing the new system here, and we have a webinar on Monday 20th January at 4pm where we will explain exactly how it works.

I think the idea of each teacher getting an overview of other teachers' comments on a class is interesting. The example of written feedback on a Year 5 child's piece of work puzzled me. If this feedback is given to the child to read and process I'm not sure it would be that effective. Judging from the quality of the child's writing I wasn't convinced he/she would completely understand the feedback let alone be able to act on it. In my experience much of the written feedback that appears on primary phase children’s work is there to show accountability. I can recall reading extensive comments on FS2 work and wondering for whose benefit it was actually there. The head's, parents', Ofsted. Definitely not the child's. I'm as guilty as the next teacher of spending significant amounts of time writing what I thought was meaningful advice and uplifting encouragement. I think what needs time spent is going back over a piece of work as a class and focusing on the aggregated strengths and weaknesses. I'm unsure as to whether that would be the intention of this tool or not.

Thanks for this, Daisy. A very interesting and smart application of LLMs. I'm interested in the actual output of the feedback, and wondering how it would be used in the classroom or to drive improvement. For example, where the AI gives the feedback it appears to:

Categorise the feedback by area and if it is a strength/weakness

Summarise the feedback

Give an example of where a teacher has given that feedback

What it doesn't do is say what it is the student actually wrote that merits that feedback. So if, for example, I wanted to see a specific case of a student "over-relying on dialogue without enough narrative description" I would have to go looking through transcripts for that. As a teacher then, I'm not 100% sure how to deliver this feedback.