Updates from our AI assessment projects

Three new findings

This term, we’ve been busy turning out results and analysis for all of our big Comparative Judgement national assessment projects.

The Comparative Judgement plus AI model, which we developed earlier this year and trialled in March, is now available as standard for all of our national & bespoke assessments. We have now assessed over 200,000 pieces of writing using this model and we have just completed our first national history assessment.

Here are three new things we’ve learned from this term’s assessments.

AI Comparative Judgement delivers very similar results to human Comparative Judgement - it’s just quicker!

We’ve written extensively about the high agreement rates we see between our human & AI judges.

We now have some different data points showing something similar.

For the Year 3 writing assessment that we ran this term, almost half of our schools chose to use AI judges, and the rest chose not to. This means we can compare the results of each sub-group and see if there are any discrepancies.

What we found was that the two groups were very similar. The overall means of each group were exactly the same: 493. The standard deviation for the AI-judged group was slightly smaller - 39 compared to 46. This means there were fewer very high and very low scores in the AI-judged group. We are not totally sure why this is, but it is not a huge difference.

AI is better at Comparative Judgement than absolute judgement

There are a lot of organisations out there doing AI marking. Most of them get the AI to do traditional marking, which is a form of absolute judgement. You are asking the AI to look at one piece of writing and place it on an absolute scale.

We trialled this approach in the past and moved away from it for several reasons, the most important of which was that the AI just wasn’t very good at it.1

One way we can validate the scores from any assessment is to see if they help you predict the scores the same students got on other assessments of the same construct. We have used this method to validate our human Comparative Judgement assessments over the last few years and we routinely see 0.7+ correlation between student scores on one assessment and the next. Using the AI to make absolute judgements, we saw only a 0.5 correlation.

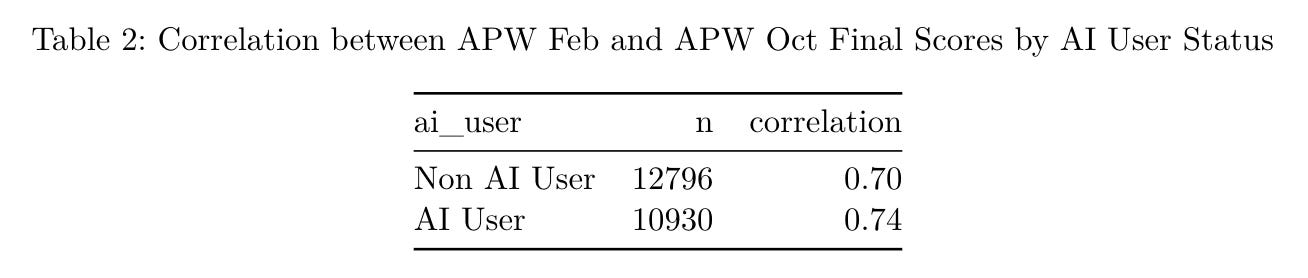

However, now we are using Comparative Judgement, we are seeing much higher correlations. Approximately 23,000 students who took part in this term’s Year 3 assessment also took part in a similar Year 2 assessment in February which was entirely judged by humans. We could therefore measure the correlation between the Feb Y2 assessment and the Oct Y3 assessment. We found that the October Y3 human and AI judges both achieved high correlations with the Feb Y2 assessment. (This of course is another data point showing that the AI is as good as humans).

AI can judge subjects other than English Language

We have just completed our first nationally standardised history assessment. 25 schools and just over 4,000 students took part. In the past we have had lots of schools use our platform for history assessment, but we’ve never run a nationally standardised project, partly because there aren’t as many history teachers as English teachers and this makes judging quite time consuming.

Adding in AI judges dramatically reduced the time it took to judge. On average, each teacher in the project judged for just under 20 minutes - which is what we predicted. In return, they got 7 PDFs with incredibly detailed data and feedback.

Was the AI good at judging more complex essays where the focus is not just on writing but on subject content too? The AI agreed with the human decisions 77% of the time. This is slightly lower than the 85% we typically get for writing assessments, but it’s still not bad. Our initial feedback from schools is that the results made sense.

We also have hundreds of schools using our platform to run custom AI assessments in a whole range of subjects. Custom assessments use all of our AI features, but they are customised to an individual’s schools curriculum & calendar and aren’t nationally standardised. It is early days, but so far the approach seems to be working well for all these other subjects too.

If you would like to learn more, our next introduction webinar is in January.

Even if the AI does get better at absolute judgement, there are still problems. It’s hard to use human oversight to validate this approach, and there is no statistical model underneath it - which is a problem given that most grades involve a significant statistical element (eg in England about 2.5% of students get a grade 9 in GCSE English Language).

Are you working with any school systems in the US yet?

What does APW mean from Table 2?